Need a PRNG? Use a CSPRNG

This post is about Random Number Generators, RNGs for short.

We often want computers to behave randomly, for various reasons:

- Cryptography. Encryption keys need to be random to prevent attackers from guessing them.

- Monte Carlo simulations. We want to calculate the statistics of some random phenomenon by random sampling.

- Monte Carlo algorithms. Certain computational problems are easier to solve with high probability using randomness than deterministically with certainty. Primality testing is an example.

- Las Vegas algorithms. These always produce the correct answer, but their efficiency depends on using randomness. Quicksort is an example.

- Machine learning. Neural network weights are initialized randomly, training uses random sampling.

- Games with randomness. An online backgammon server needs random dice.

- Video games. We want certain elements of the game to behave randomly.

- Art. Predictable patterns are boring.

There are several ways to generate random numbers. You can use a Hardware RNG, or you can use a Pseudorandom Number Generator, PRNG for short. There are several PRNG algorithms available. The question I will try to answer is: which one should you use?

I have already given away my answer is the title. You should always use a Cryptographically Secure PRNG, CSPRNG for short, and ignore all other PRNGs. There is no good reason to use anything else. I know it sounds simplistic, but I will explain why it is the only reasonable way to do it.

This advice goes somewhat against the common practice and the usual advice. What people typically say is: use a CSPRNG if you are doing cryptography and need security against adversarial attackers. For all other purposes pick among the “classic” non-cryptographic PRNGs. It sounds reasonable. After all, it’s in the name: CSPRNG stands for “cryptographically secure” PRNG. If you don’t need to worry about security, why bother doing that?

But I will argue that always using CSPRNGs is the only thing that really makes sense.

A motivating example

Consider the following problem in linear algebra:

What is the probability that a random matrix in (modulo 2) is full rank, i.e. invertible?

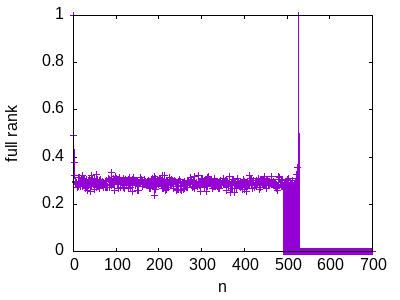

We can test this experimentally. I wrote a C++ program that calculates this for by sampling 1000 random matrices for each .

It looks like we get about 30% for , then we suddenly start flipping between 0% and 30% for , then we get 100% for , and then 0% for .

You can try running this code yourself and see if you get the same answers. This experiment is repeatable.

Such interesting results! We have some sort of phase transition around 500. You can easily imagine somebody writing a scientific paper based on an experiment similar to this.

But the effect is not real. The true answer is that the probability is:

I used the rand() function from the GNU C library to generate the random matrices. This PRNG uses a Lagged Fibonacci Generator. The results are an artifact of this particular PRNG. There are detectable dependencies between the pseudo-random bits generated by the generator which results in matrix rows being linearly dependent for large (and, surprisingly, always independent for ).

What should I have used instead? How can I know that if I use a different PRNG, I will get more trustworthy results?

I can’t if I use any old PRNG. But if I use a CSPRNG, then I know by definition of cryptographic security that one of two things will happen:

- Either the results will be statistically correct, or

- I will have broken the cryptographic security of that particular CSPRNG.

Both cases are pretty neat!

If, hypothetically, my results are statistically off with a CSPRNG, I will have just found a way to break cryptographic security of the cryptographic primitive used byt that CSPRNG. By definition, that’s what it means to break cryptographic security. That’s even better than learning about matrices!

That scenario is very unlikely in practice. A lot of people have deliberately tried and failed to break the security of standard CSPRNGs – that’s why we treat them as cryptographically secure. It’s unlikely that just messing around with matrices I have stumbled upon a way to break one without even really deliberately trying.

Hardware RNGs

Maybe we should just use true randomness rather than settling with pseudorandomness? There are hardware devices that generate random bits.

Some modern processors have them built in, for instance, modern x86-64 CPUs have the RDRAND instruction.

One could use /dev/random in Linux, or the online service random.org.

There are a few reasons PRNGs are usually preferred:

- Truly random bits are hard to obtain efficiently.

- It’s difficult to make them unbiased.

- With PRNGs, we can easily replay the whole random sequence without having to store all the bits.

In fact, for the first two reasons, the RDRAND instruction is implemented in hardware with a hybrid of a hardware generator and a CSPRNG. /dev/random also does this.

Hardware generators are still very important however. We need them to seed PRNGs. A PRNG takes a small truly random seed and uses it to generate a long sequence of pseudorandom bits.

Non-cryptographic PRNGs

There are several non-cryptographic PRNGs in common use:

- Lehmer generator. Available in C++ as

minstd_rand0andminstd_rand. - Linear congruential generator. Previously used by the

randfunction in the GNU C library. - Lagged Fibonacci generator. Currently used by the

randfunctions in the GNU C library. - Linear-feedback shift register.

- Mersenne Twister. Very popular. Available in C++ as

mt19937, the default PRNG used by the Pythonrandommodule. - PCG, Permuted Congruential Generator.

- Xorshift / Xoroshiro. A popular recent generator. Used by default in JavaScript engines in Chrome, Firefox, Safari.

I am arguing: these should never be used! They are weak generators. They all are easily predictable and have statistical weaknesses. Of course they do – if they didn’t, they would be considered CSPRNGs!

It is a bad state of affairs that they are provided as the default PRNG in various programming languages.

The Dark Art of choosing a weak PRNG

Donald Knuth in his classic books The Art of Computer Programming devotes a good part of Volume 2 just to this topic. He analyzes various parameters of Linear Congruential Generators and Lagged Fibonacci Generators and considers their strengths and weaknesses.

He never considers CSPRNGs at all. That’s because modern cryptographic primitives on which CSPRNGs are built didn’t exist yet when the books were written in the 1960s.

There are a lot of papers analyzing the pros and cons of various algorithms and their weak spots.

Sebastiano Vigna, one of the authors of xoroshiro, has written a paper about why one shouldn’t use the Mersenne Twister and has argued online about why PCG is flawed.

The arguments got rather heated when PCG’s author M.E. O’Neill wrote in defense of PCG.

My take: just use a CSPRNG and you will never have to worry about any of this. CSPRNGs don’t have any such weaknesses, by design! As long as their security claims hold up.

CSPRNGs

Cryptographically secure PRNGs are closely related to encryption. Any CSPRNG can be turned into a stream cipher, and vice-versa. What a stream cipher really is is a CSPRNG that you xor your message with in order to encrypt it.

To generate a CSPRNG from a block cipher, you can simply run the block cipher in counter mode. In other words, you process the numbers 0, 1, 2, 3, etc using a random encryption key, and that gives you the pseudo-random bits.

There are other ways to do this in order to get some extra protection when your key leaks but let’s not get into that because it’s not really relevant outside of cryptography.

Two common CSPRNGs are:

I recommend ChaCha20. It’s rather simple. It’s easy to implement from scratch in not that many lines of code. It doesn’t require special hardware support. AES uses special hardware instructions to be efficient.

The de-facto standard Rust library for random numbers, rand, uses ChaCha12, a reduced-round variant of ChaCha20, as its default PRNG.

What makes a good PRNG

A good PRNG generates a sequence that “looks random”. This is usually taken as “it passes as many various statistical tests as possible”. Statistical tests try to distinguish pseudo-random numbers from truly random numbers.

The definition of a CSPRNG is: given the first n bits, there is no efficient algorithm that will predict the next bit with success rate non-negligibly better than 50%.

This implies that a CSPRNG will pass all statistical tests that run in reasonable time.

Can you see the similarity between what makes a good PRNG and what makes a CSPRNG? A CSPRNG is the ultimate PRNG, by definition.

CSPRNGs are better

Suppose you are creating an online backgammon server. Backgammon uses dice. You need fair dice. What PRNG should you choose?

If you use a weak PRNG, players can relatively easily reverse engineer what is going on and predict future dice based on previous dice rolls. Clearly a bad situation all around!

How about if you are running a scientific Monte Carlo simulation. We already saw an example with matrices. You can’t trust the results if you use a weak PRNG!

Can you trust the results if you use a CSPRNG? Sort of.

Hypothetically you could get biased results because there is no proof that any particular CSPRNG really is secure. But if you do see biased results, you will have accidentally broken the security of that CSPRNG, by the very definition of cryptographic security. You would be able to read encrypted messages by exploiting this bias.

So for practical purposes: yes, you can trust the results obtained by CSPRNG.

What if you’re just running some Las Vegas algorithm such as quicksort? Do you really need a CSPRNG?

Yes! The analysis of quicksort assumes that the numbers are truly random. If they can be distinguished from truly random, that analysis doesn’t hold any more. Your quicksort might become quadratically slow for certain types of data. You never know. Why risk it?

With CSPRNG, if you notice that your quicksort becomes quadratically slow, again you would have accidentally broken the cryptographic security of that PRNG.

What if you’re just building a video game? Do you really care about the quality of the random numbers used for the behavior of characters in the game? Well, in this case maybe not. But then, why bother even thinking about this? Just plug in a CSPRNG and forget about it. There is no harm.

What about performance?

By this point many of you are probably thinking: what about performance? Aren’t CSPRNGs less efficient than simple weak PRNGs?

Let’s look at some numbers.

If you use a weak PRNGs such as Xoshiro256PlusPlus, you are getting 7 GB/s.

If you use ChaCha20, you are getting 1.8 GB / s. About 4x less.

This seems like a reasonable argument for weak PRNGs: 4x speed-up is a lot!

But that would only be true if generating random bits was the hot spot, the bottleneck of your program. It never is in practice.

1.8 GB / s is 14 billion random bits per second. Do you really need 14 billion random bits per second in your video game, or in your Monte Carlo simulation? Probably not. Probably many orders of magnitude less. Whatever you do with those random bits most likely takes orders of magnitude more effort than just generating those bits.

And if you ever do need billions or trillions of bits, you probably care a lot about the quality of those bits. Any bias will show with such a large sample.

“But I don’t care about quality”

So you might say: in my program I don’t care whether the numbers are really all that random, so shouldn’t I just use some weak PRNG?

I have two answers to this.

The first answer is: even if you don’t care, there is no harm from using CSPRNG. So why not just always use it?

My second answer is: if you really don’t care, why not just do this?

int getRandomNumber() {

return 4;

}

Or more realistically, if you only care that the numbers don’t repeat too often, why not use a simple counter that returns 0, 1, 2, 3, …?

If you say “that’s not random enough”, that indicates that you do care about quality, and so you should just use a CSPRNG and be done.

“My favorite language doesn’t provide CSPRNGs”

That’s the only reasonable argument for using a weaker PRNG. I hope this situation changes in the future.

You can still use a third-party library. Or you can just implement ChaCha20 yourself. It’s not that complicated. Wikipedia has an implementation of the core algorithm in 31 lines of C code.

Weak PRNGs are poor man’s wanna-be CSPRNGs

If you look at how a weak PRNG such as Xoroshiro128** is implemented, you will see a bunch of xors, bit shifts, and multiplications.

If you look at how ChaCha20 is implemented, you will see a bunch of xors and bit shifts and additions. There are just more iterations of them.

The way I see it: weak PRNGs are wanna-be CSPRNGs that don’t quite get there. They do the same kinds of things that CSPRNGs do, they just don’t quite get the job done fully. They don’t mix the bits enough that the output becomes indistinguishable from truly random.

Just use the real thing!

Summary

I hope that this convincingly argues that CSPRNGs are the way to go. We should all just stop using weaker PRNGs altogether.

It is a shame that major programming languages provide weak PRNGs as the default in their standard libraries.

Rust’s third-party crate rand that is the de-facto Rust standard for PRNGs is a notable exception: it uses a CSPRNG as the default generator.

I hope everybody else follows suit and does the same.